The savla.dev project (pronounced ‘shah-vlah-dev’) was established around January 2022. Since then, the organization has gained three stable members, along with a number of occasional contributors and collaborators.

Fig. 1: Expanded high-resolution organisation logo.

goals

The project’s main goal is to study, promote and serve free and open-source software (FOSS), to contribute to the community, and to help small businesses get onto the Internet and adopt cheaper software to lower their costs a little.

We do want to grow, but at the same time we want to give others interested in FOSS the chance to grow stronger together with us (monke stronger together).

infrastructure

All our nodes (except for the hypervisors) are deployed as VMs using the virt-install tool via the libvirt API. OS installation is unattended: we use RHEL-like Linux distributions such as AlmaLinux 9 with a kickstart config file (executed by anaconda/dracut). After the initial installation, the Ansible base role is applied to ensure a unified baseline configuration for all hosts. This includes basic firewall settings and the creation of cloud users with their group permissions. Moreover, some config files such as .vimrc are deployed to each user’s home directory.
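As a rough illustration of the unattended install step, the sketch below assembles a virt-install command line that injects a kickstart file into the installer. The VM name, file paths, sizes and the `almalinux9` OS variant are assumptions made for the example (the variant name depends on the local osinfo database), not our actual values.

```python
import shlex


def virt_install_argv(name, ks_path, location, memory_mib=2048, vcpus=2, disk_gib=20):
    """Build a virt-install command line for an unattended kickstart install."""
    ks_name = ks_path.rsplit("/", 1)[-1]
    return [
        "virt-install",
        "--name", name,
        "--memory", str(memory_mib),
        "--vcpus", str(vcpus),
        "--disk", f"size={disk_gib}",
        "--os-variant", "almalinux9",  # assumed variant name
        "--location", location,
        # Copy the kickstart file into the installer's initrd ...
        "--initrd-inject", ks_path,
        # ... and tell anaconda where to find it.
        "--extra-args", f"inst.ks=file:/{ks_name} console=ttyS0",
        "--graphics", "none",
        "--noautoconsole",
    ]


# Hypothetical node name and paths, for illustration only.
argv = virt_install_argv(
    name="node01",
    ks_path="/srv/kickstart/base.cfg",
    location="/var/lib/libvirt/images/AlmaLinux-9-x86_64-dvd.iso",
)
print(shlex.join(argv))
```

The script only prints the command; running it against a hypervisor (for example via `subprocess.run(argv, check=True)`) is left out on purpose.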

Virtual servers are usually deployed with the container Ansible role on top. This role ensures that Docker Engine is installed so that Docker containers can run there. We use containers a lot, and we (mostly) write our apps and tools to be runnable in Docker from the beginning. We plan to adopt some kind of container orchestration: Kubernetes, Docker Swarm, or possibly Nomad by HashiCorp.

Ansible is also used to deploy external (public) domains forwarding to the savla.dev site (domain parking), as well as internal postfix relays. The challenge here is to keep these services working under SELinux restrictions too.

core

Our infrastructure core consists of two DigitalOcean Droplets (VPSs, edge nodes) in different European locations, primarily to ensure high availability of the HTTP/S services. These Droplets are interconnected with our hypervisors using WireGuard VPN tunnels (over UDP), and each edge node has its own subnet routed through the network.
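The idea of one routed subnet per edge node can be sketched with Python's `ipaddress` module. The `10.10.0.0/16` range, the `/24` prefix length and the node names below are hypothetical; the real addressing plan is not described here.

```python
import ipaddress

# Hypothetical private range reserved for the VPN.
VPN_RANGE = ipaddress.ip_network("10.10.0.0/16")
EDGE_NODES = ["edge-a", "edge-b"]  # placeholder node names


def allocate_subnets(parent, nodes, prefixlen=24):
    """Assign each node the next free subnet of the given prefix length."""
    subnets = parent.subnets(new_prefix=prefixlen)
    return {node: next(subnets) for node in nodes}


plan = allocate_subnets(VPN_RANGE, EDGE_NODES)
for node, net in plan.items():
    # A WireGuard peer entry for this node would list the subnet under AllowedIPs.
    print(f"{node}: AllowedIPs = {net}")
```

Because every node receives a distinct, non-overlapping network, a peer's `AllowedIPs` entry is enough to tell WireGuard which tunnel a packet belongs to.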

DNS

As IP addresses may change from time to time, we want our apps to be bound to one stable address: a name rather than a number. For this purpose, we implemented an internal DNS server with internal domain names (e.g. savla.net is private, thus not reachable from the Internet). The server itself runs bind9 in a Docker container. All zones and configuration files are kept as code in a private GitHub repository. This repository also has CI/CD pipeline(s) set up to redeploy the server on push. One can then edit any zone file, commit, and push to the master branch, and the changes take effect in about a minute (at the moment, the DNS cache is wiped at redeploy too).
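From an application's point of view, binding to a name simply means resolving it at connection time instead of storing an IP address. A minimal sketch follows, using `localhost` so that it runs anywhere; an internal name such as the hypothetical `db.savla.net` would be resolved the same way by a host that uses the internal DNS server.

```python
import socket


def resolve(name, port):
    """Resolve a host name to its current IP addresses at call time."""
    infos = socket.getaddrinfo(name, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


# The app keeps only the name in its configuration and looks it up on connect,
# so a changed record in the zone file needs no change on the app side.
print(resolve("localhost", 80))
```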

HTTP proxy

Cloudflare HTTP Proxy acts as the outermost edge proxy, which forwards requests to our infra (and hides it). At the moment, two edge virtual servers take care of distributing HTTP requests to particular backends. These edge nodes run an nginx reverse proxy and serve HTTPS traffic coming from the Cloudflare proxy. The traffic sent to the backends is then proxied again by a traefik reverse proxy, which dynamically routes HTTP flows to the deployed container stacks.

monitoring

For monitoring purposes, we have node exporter installed on every node. Metrics are scraped by Prometheus and visualized in Grafana dashboards. Moreover, we use our internal monitoring service called dish, a small Golang binary that is given a list of sockets to check and pushes its results to the Prometheus Pushgateway to be scraped later (and then visualized in Grafana).
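The check-and-push pattern described above can be approximated in a few lines. This is not dish itself (which is written in Go) but a Python sketch of the same idea; the metric name `socket_up`, the job name, the target list and the gateway URL are all assumptions made for the example.

```python
import socket
import urllib.request


def check_socket(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def render_metrics(results):
    """Render check results in the Prometheus text exposition format."""
    lines = ["# TYPE socket_up gauge"]
    for (host, port), up in sorted(results.items()):
        lines.append(f'socket_up{{host="{host}",port="{port}"}} {int(up)}')
    return "\n".join(lines) + "\n"


def push(metrics, gateway_url, job="socket_check"):
    """PUT the metrics to a Pushgateway, replacing the job's previous group."""
    req = urllib.request.Request(
        f"{gateway_url}/metrics/job/{job}",
        data=metrics.encode("utf-8"),
        method="PUT",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status


if __name__ == "__main__":
    # Hypothetical targets; a real list would come from configuration.
    targets = [("127.0.0.1", 22), ("127.0.0.1", 443)]
    results = {t: check_socket(*t) for t in targets}
    print(render_metrics(results), end="")
    # Pushing is left commented out because it needs a reachable gateway:
    # push(render_metrics(results), "http://pushgateway.example:9091")
```

Pushing rather than being scraped suits a short-lived checker: the binary can run from cron, exit, and still leave its last result available for Prometheus to collect.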

documentation

Internally, we also have a documentation system built on top of the Harp Node.js server. We populate it with Markdown-formatted files, and Harp compiles them to static HTML files for production use. We tend to write self-documenting code, or we document the code directly inline. As in many organizations, we struggle somewhat to maintain unified infrastructure documentation (to be done soon!).

information system

To maintain a single source of truth (alongside Ansible as code), we develop our internal information system called swis (sakalWeb Information System, version 5). Since swis acts only as a backend embedded database, it exposes a RESTful JSON API that is used by a few frontend applications.

backup offloading idea

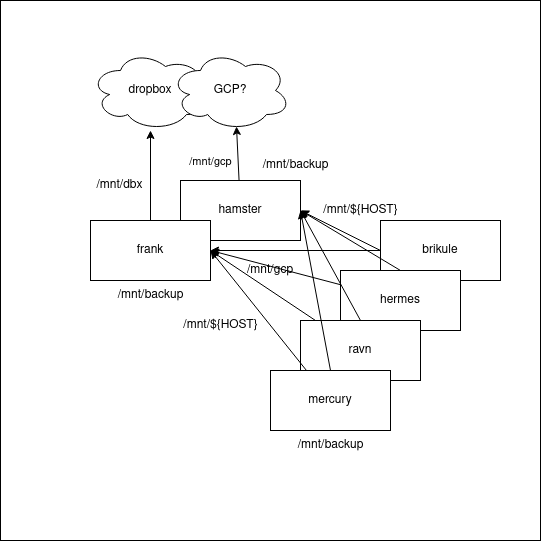

Fig. 2: Diagram of the backup and backup offloading idea.


more

Find out more on our GitHub page and on our homepage. Feel free to give us feedback if you like.